MolFun

High-performance acceleration for molecular modeling

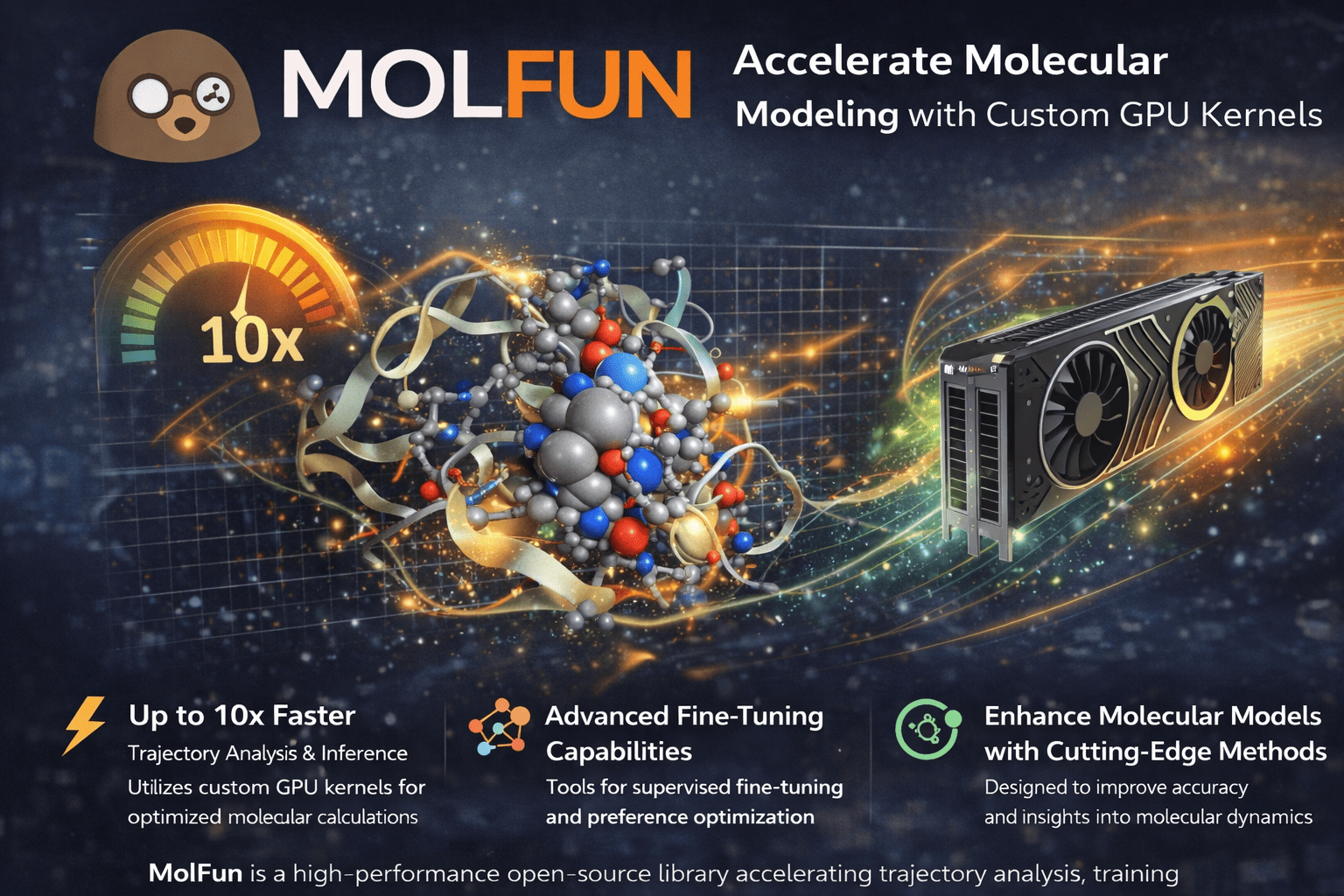

MolFun is a high-performance library designed to accelerate trajectory analysis, training, and inference for molecular modeling models like AlphaFold, Boltz, Protenix, and more.

At its core, MolFun provides custom GPU kernels optimized for molecular calculations, enabling researchers to process trajectories and run model inference up to 10x faster than standard implementations.

Beyond raw performance, MolFun offers comprehensive tools for supervised fine-tuning and direct preference optimization, allowing models to understand molecular dynamics, poses, and conformational changes with unprecedented accuracy.

The library provides:

- GPU-optimized kernels for trajectory analysis calculations, running up to about 3× faster than vectorized PyTorch on GPU and 20× to 800× faster than CPU libraries in the benchmarks below.

- Accelerated inference pipelines for molecular modeling models, seamlessly integrated with PyTorch workflows.

- Training acceleration tools with optimized gradient computations and efficient memory management.

- Supervised fine-tuning (SFT) and direct preference optimization (DPO) tools for teaching models to understand molecular dynamics, poses, and structural changes.

MolFun is not just about speed.

It's about making molecular modeling more accessible and efficient, enabling researchers to focus on discovery rather than waiting for computations to complete.

By combining low-level GPU optimizations with high-level training tools, MolFun bridges the gap between raw computational power and practical molecular understanding.

A library where molecular calculations are not just faster, but smarter.

Features

Comprehensive acceleration and training tools for molecular modeling

GPU-Optimized Trajectory Analysis Kernels

Custom GPU kernels, written in Triton in the benchmarked implementations, accelerate trajectory analysis calculations. Process molecular dynamics trajectories up to 10× faster with optimized memory access patterns and parallel computation.

High-Performance Model Inference

Accelerate inference for molecular modeling models like AlphaFold, Boltz, Protenix, and more. Optimized tensor operations and memory management for production workloads.

Efficient Training Acceleration

Speed up training loops for molecular models with optimized gradient computations, mixed precision support, and efficient data loading pipelines.

Supervised Fine-Tuning (SFT) Tools

Comprehensive tools for fine-tuning molecular models to understand dynamics, poses, and conformational changes. Streamlined workflows for domain-specific adaptation.

Direct Preference Optimization (DPO)

Advanced DPO implementations for aligning molecular models with expert preferences. Optimize model behavior for specific molecular understanding tasks.
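To make the preference-optimization idea concrete, here is a minimal sketch of the standard DPO objective for a single preference pair, written in plain Python. It is not MolFun's implementation: the function name `dpo_loss`, its parameters, and the default `beta=0.1` are illustrative assumptions. The "chosen" and "rejected" inputs stand for the log-probabilities that the trained model and a frozen reference model assign to the expert-preferred and the dispreferred sample (for example, two candidate poses).

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Standard DPO loss for one (chosen, rejected) preference pair.

    All names here are hypothetical; this is a reference sketch only.
    """
    # How much more the policy favors the chosen sample over the rejected
    # one, relative to the frozen reference model.
    margin = ((policy_chosen_logp - ref_chosen_logp)
              - (policy_rejected_logp - ref_rejected_logp))
    z = beta * margin
    # -log(sigmoid(z)) written as softplus(-z) in a numerically stable form.
    return max(-z, 0.0) + math.log1p(math.exp(-abs(z)))


if __name__ == "__main__":
    # Policy agrees with the expert: loss falls below log(2).
    print(dpo_loss(-1.0, -3.0, -2.0, -2.0))
    # Policy disagrees with the expert: loss rises above log(2).
    print(dpo_loss(-3.0, -1.0, -2.0, -2.0))
```

When the policy and the reference agree exactly, the margin is zero and the loss equals log 2; training pushes the margin positive so that the preferred sample becomes relatively more likely.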

Seamless PyTorch Integration

Native PyTorch compatibility with zero-copy operations. Drop-in replacements for standard operations with automatic GPU acceleration.

RMSD with Superposition (Kabsch Alignment)

Benchmark: 2501 frames, 3891 atoms (full protein), batch RMSD calculation with optimal alignment.

| Method | Total time | Time per frame | vs MolFun |
|---|---|---|---|
| MolFun (Triton GPU) | 0.71 ms | 0.28 µs | baseline |
| PyTorch GPU (vectorized) | 2.21 ms | 0.88 µs | 3.1× slower |
| MDTraj (C/Cython CPU) | 14.69 ms | 5.87 µs | 20.8× slower |
| MDAnalysis (CPU) | 565.98 ms | 226.30 µs | 801× slower |

Key insights:

- GPU vs GPU (fair comparison): MolFun's Triton kernels are 3.1× faster than the vectorized PyTorch GPU implementation.

- GPU vs CPU: MolFun is 21× faster than MDTraj (optimized C/Cython) and 801× faster than MDAnalysis.
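For readers who want to see what the benchmarked operation computes, the following is a small NumPy reference sketch of batch RMSD after optimal (Kabsch) superposition. It is a CPU illustration under our own assumptions, not MolFun's Triton kernel or its API; the function name `kabsch_rmsd` and the array layout are hypothetical. It uses the common shortcut of reading the minimum RMSD from the singular values of the covariance matrix, so the rotation never has to be applied explicitly.

```python
import numpy as np


def kabsch_rmsd(traj, ref):
    """RMSD of every frame to `ref` after optimal rigid-body superposition.

    traj: (n_frames, n_atoms, 3) coordinates; ref: (n_atoms, 3).
    Hypothetical reference implementation, not the MolFun API.
    """
    traj = np.asarray(traj, dtype=np.float64)
    ref = np.asarray(ref, dtype=np.float64)
    n_atoms = ref.shape[0]

    # Remove translation by centering each structure on its centroid.
    p = traj - traj.mean(axis=1, keepdims=True)
    q = ref - ref.mean(axis=0, keepdims=True)

    # One 3x3 covariance matrix per frame: H = P^T Q.
    h = np.einsum("fai,aj->fij", p, q)

    # Singular values give the best achievable overlap; the determinant
    # sign excludes improper rotations (reflections).
    u, s, vt = np.linalg.svd(h)
    d = np.where(np.linalg.det(u @ vt) < 0.0, -1.0, 1.0)
    overlap = s[:, 0] + s[:, 1] + d * s[:, 2]

    # Minimum mean squared deviation, clamped against rounding error.
    msd = ((p ** 2).sum(axis=(1, 2)) + (q ** 2).sum() - 2.0 * overlap) / n_atoms
    return np.sqrt(np.maximum(msd, 0.0))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    reference = rng.normal(size=(100, 3))
    # Synthetic trajectory: the reference plus per-frame noise.
    frames = reference + 0.1 * rng.normal(size=(5, 100, 3))
    print(kabsch_rmsd(frames, reference))
```

Because the whole computation reduces to per-frame 3×3 matrices plus a few sums, it parallelizes naturally across frames, which is the property a batched GPU kernel can exploit.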

Contact Maps (Batch)

Benchmark: 2501 frames, 254 atoms (Cα only), cutoff = 8.0 Å.

| Method | Total time | Time per frame | vs MolFun |
|---|---|---|---|
| MolFun (Triton GPU) | 66 ms | 0.026 ms | baseline |
| PyTorch GPU (vectorized) | 71 ms | 0.028 ms | 1.1× slower |
| MDTraj (CPU) | 2,432 ms | 0.97 ms | 37× slower |
| MDAnalysis (CPU) | 3,163 ms | 1.26 ms | 48× slower |

Additional benefit: MolFun stores contact maps bit-packed, one bit per atom pair, which takes 8× less memory than boolean matrices that use one byte per entry.
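The bit-packing idea can be illustrated with a short NumPy sketch. This is a CPU reference under stated assumptions, not MolFun's kernel or storage format: the function names `contact_maps_packed` and `unpack_contacts`, the `<=` cutoff convention, and the row-wise packing layout are all hypothetical choices made for the example.

```python
import numpy as np


def contact_maps_packed(traj, cutoff=8.0):
    """Per-frame contact maps, stored as 8 contacts per byte.

    traj: (n_frames, n_atoms, 3) coordinates in Å.
    Returns uint8 of shape (n_frames, n_atoms, ceil(n_atoms / 8)).
    Hypothetical reference implementation, not the MolFun API.
    """
    traj = np.asarray(traj, dtype=np.float32)
    # Pairwise squared distances; comparing with cutoff**2 avoids a sqrt.
    # Note: this materializes an (F, N, N, 3) array, so large
    # trajectories should be processed in chunks of frames.
    diff = traj[:, :, None, :] - traj[:, None, :, :]
    dist2 = np.einsum("fijk,fijk->fij", diff, diff)
    contacts = dist2 <= cutoff * cutoff  # diagonal is always True
    # Pack each row of booleans into bits (last byte is zero-padded).
    return np.packbits(contacts, axis=-1)


def unpack_contacts(packed, n_atoms):
    """Recover the boolean (n_frames, n_atoms, n_atoms) contact maps."""
    return np.unpackbits(packed, axis=-1, count=n_atoms).astype(bool)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    coords = rng.uniform(0.0, 30.0, size=(4, 254, 3))
    packed = contact_maps_packed(coords)
    dense_bytes = 4 * 254 * 254  # one byte per boolean entry
    print(packed.shape, dense_bytes / packed.nbytes)
```

With 254 atoms each row needs 32 bytes instead of 254, so the saving is slightly under 8× because the last byte of every row is padded.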

All benchmarks were run on an NVIDIA GPU with CUDA 12+. Results may vary depending on hardware.